Companies that don’t create a full AI strategy plan risk lagging behind rivals. To succeed with generative AI, you need to plan your AI strategy carefully. This plan should match your business goals and set clear, measurable targets. Furthermore, a generative AI strategy plan acts as the base for all AI projects, helping companies stay flexible when under pressure. This process involves assessing your current technology setup, ensuring effective data management, and assembling the appropriate team to develop your AI strategy.

We think that building a successful generative AI plan isn’t just about the tech—it’s about making a complete system where business goals, tech skills, and human know-how come together. In this article, we’ll look at why most strategies don’t work and give you a practical plan to help your company succeed where others have failed.

Lack of Strategic Alignment with Business Goals

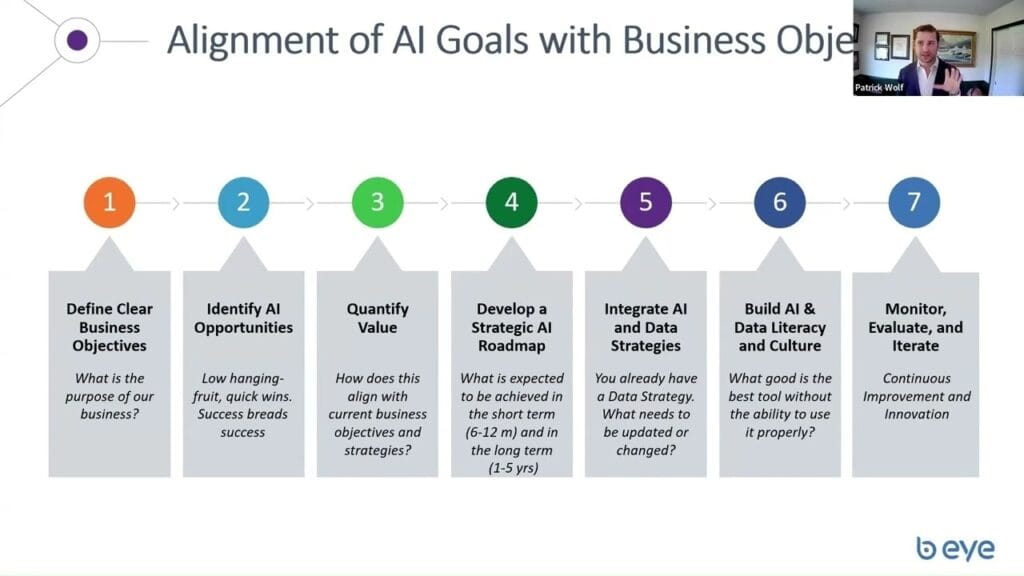

Image Source: LinkedIn

“The future belongs to those who understand that doing more with less is compassionate, prosperous, and enduring, and thus more intelligent, even competitive.” — Paul Hawken, Environmentalist Entrepreneur, and Author

A whopping 70% of executives confess their AI strategy doesn’t match up with their overall business goals. This mismatch is the main reason why most generative AI projects don’t live up to expectations. While 89% of leaders see AI as crucial for staying competitive, only 28% say their AI plans closely tie in with their business objectives.

AI Goals and Business KPIs Don’t Line Up

Old-school key performance indicators (KPIs) often fail to give leaders the insights they need, which hurts both day-to-day operations and long-term plans. Companies set KPIs to measure progress on various business fronts but often forget to link these numbers with their AI efforts.

As a result, companies face what Forrester calls “outer misalignment”—when data scientists use stand-in variables that don’t represent real business goals. This difference between stand-in metrics and actual business aims causes basic disconnects in AI development. Furthermore, businesses often struggle with organizational misalignment: “Different departments have many incentives and aim for different KPIs.”

About 34% of companies now use AI to create new KPIs, and 9 out of 10 managers in that group agree their KPIs have improved with AI’s help. Companies that do well with AI focus more on business metrics than on purely financial ones, using specific attribution models for each use case.

Not Setting Clear Goals for GenAI Success

Lack of clear methods to measure success accounts for about 70% of failed AI projects. Many companies jump on the generative AI bandwagon because they think it’s cutting-edge or because their rivals are doing it—not because it’s part of a well-thought-out plan with specific aims.

When bringing in generative AI, KPIs still play a key role in assessing model performance, lining up projects with business targets, making data-based tweaks, and showing the overall worth of the project. However, most businesses still rely on the same math-based quality metrics used for earlier AI technology, overlooking metrics that show how effective the system is and how well users adopt it.

BCG’s study shows that just 22% of companies have moved past the proof-of-concept phase to create some value from AI, while only 4% are generating significant value. Companies need to set clear success metrics during the research phase, measuring things like better productivity (mentioned by 51% of executives) and improved customer experience (50%).

Too Much Focus on Tech, Not Enough on Business Value

Many companies tackle AI implementation backwards—they start with the tech instead of business goals. Thomson Reuters’ AI strategy proves that successful implementation starts with clear business objectives, not tech experiments. Companies should first pinpoint specific business needs or opportunities they want to address and then pick suitable tech solutions.

Companies pour a lot of money into AI but often forget to track how much money and time it saves them. A survey shows that 61% of CIOs worldwide think AI pays off by boosting revenue, and 60% say it’s worth it because it saves time. However, only 32% measure both of these benefits.
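Tracking both benefits can start with very simple arithmetic. The sketch below is a minimal illustration, assuming a basic ROI formula of (benefit - cost) / cost; the function name, parameters, and figures are made up for this example and do not come from the survey.

```python
def ai_roi(revenue_gain: float, hours_saved: float,
           hourly_cost: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    # Count both benefits: extra revenue and the value of time saved.
    benefit = revenue_gain + hours_saved * hourly_cost
    return (benefit - total_cost) / total_cost


# Made-up figures for a single hypothetical project.
roi = ai_roi(revenue_gain=120_000, hours_saved=2_000,
             hourly_cost=40, total_cost=150_000)
print(f"ROI: {roi:.0%}")
```

Leaving out either the revenue term or the time-saved term would understate the benefit, which is the gap the survey figures point to.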

Top companies stand out by zeroing in on their main business processes and support roles. They set big goals, like aiming to improve productivity by $1 billion. These firms focus more on their people and how they work rather than just the tech and fancy math. They don’t just chase new technology; instead, they use AI to both cut costs and make more money.

To tackle these issues, companies need to set up a system that starts by pinpointing key business aims before linking AI projects straight to these targets. This way of lining things up makes sure AI work has a real impact and steers clear of turning into pricey tests that don’t do much.

No GenAI Growth Plan and Roadmap

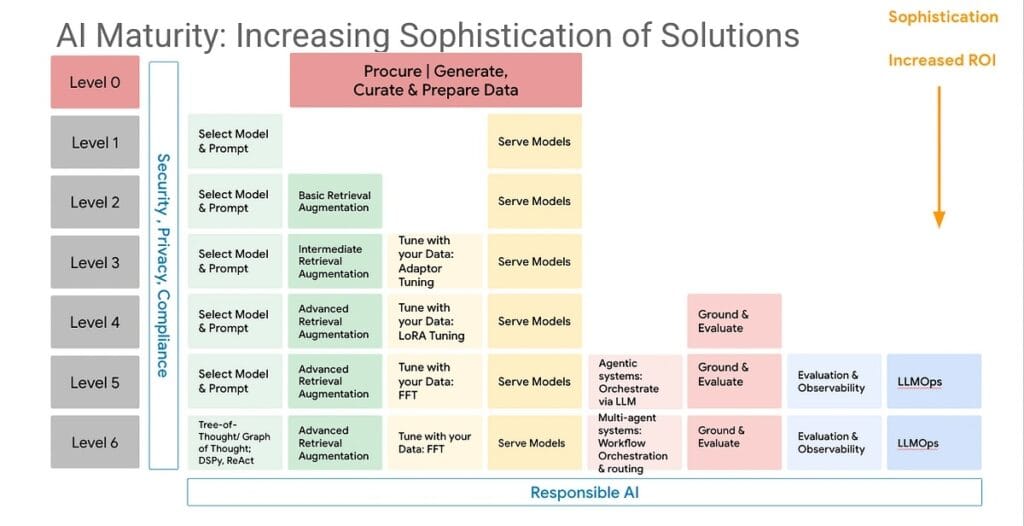

Image Source: Ali Arsanjani—Medium

Studies reveal that a whopping 90% of companies are still in the early phases of GenAI adoption, either testing concepts or building skills in small isolated groups. This key gap in assessing maturity weakens even the most ambitious frameworks for generative AI strategies.

No Starting Point for Data and Analytics Skills

Companies often jump into using generative AI without first figuring out where they stand in terms of their current abilities. To plan any successful AI strategy, you need to take a close look at how ready your company is when it comes to tech setup, IT skills, and areas where you need more expertise. This checkup becomes even more important when dealing with game-changing tech like generative AI.

Most companies don’t have well-organized or centralized data to use in retrieval-augmented generation. If you don’t know where you’re starting from, you can’t measure your progress. To assess your data, you need to spot gaps in how you collect, store, and manage it, as well as check your quality standards and rules. Prepare thoroughly before deploying any generative AI: companies that develop AI solutions without first conducting a thorough assessment are like builders putting up tall buildings on unstable foundations.

No Clear Plan for AI Use Cases

Companies often don’t have a good way to decide which generative AI projects to focus on. This leads to wasted resources and poor results. One boss put it this way: “It’s better to have a few big-impact projects than several small ones.” Still, many businesses try to do too many AI projects at once without a clear game plan.

To create a winning AI strategy, companies need to:

- Log all AI projects in a central hub where frameworks help assess their value

- Run exercises to rank use cases and spot high-potential AI challenges

- Set up feedback systems to check if initial value predictions were right
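One way to make the ranking exercise concrete is a simple weighted score. The sketch below is illustrative only: the criteria (strategic fit, expected value, feasibility), their weights, and the example projects are all assumptions, not figures from any study cited here.

```python
from dataclasses import dataclass

# Hypothetical weights; each organization would choose its own criteria.
WEIGHTS = {"strategic_fit": 0.5, "expected_value": 0.3, "feasibility": 0.2}


@dataclass
class UseCase:
    name: str
    strategic_fit: int   # 1 (poor) to 5 (excellent)
    expected_value: int  # 1 to 5
    feasibility: int     # 1 to 5

    def score(self) -> float:
        """Weighted sum of the three criteria."""
        return sum(getattr(self, k) * w for k, w in WEIGHTS.items())


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases sorted from highest to lowest score."""
    return sorted(use_cases, key=lambda u: u.score(), reverse=True)


# Made-up entries standing in for a central project registry.
registry = [
    UseCase("Support chatbot", strategic_fit=5, expected_value=4, feasibility=3),
    UseCase("Marketing copy drafts", strategic_fit=2, expected_value=3, feasibility=5),
    UseCase("Contract summarization", strategic_fit=4, expected_value=4, feasibility=4),
]

for uc in rank(registry):
    print(f"{uc.name}: {uc.score():.1f}")
```

Weighting strategic fit most heavily reflects the point that ranking is about matching the strategy, not just about what is possible. Comparing these predicted scores with the value actually realized later is one simple form of the feedback system in the last bullet.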

Businesses should know that poor ranking leads to what one study calls “a few AI projects in the mix that seem AI-related but don’t fit the strategy.” Ranking isn’t just about what’s possible—it’s about matching the strategy and how much it could help the business.

Lack of Step-by-Step Goals in AI Strategy Planning

Unlike typical tech rollouts, building generative AI involves unpredictability and fast-changing data sets. Still, companies often plan AI projects around strict deadlines instead of setting step-by-step goals.

To succeed in implementing generative AI, you need detailed plans that show the order of tasks, how to use resources, what depends on what, and flexible targets. Since AI development involves learning, companies need to keep tweaking their algorithms as data changes. Furthermore, businesses should set up ongoing ways to get feedback to keep everyone in the loop during development.

Using an agile approach to plan AI strategies tackles the unique problems of generative technology. As data sets change in quality and relevance, working in cycles lets teams quickly adjust, improve models, and add new data. Companies without feedback systems may use outdated or useless solutions, regardless of their initial investment.

Poor Technical Setup for GenAI Tasks

Image Source: Forbes

The current state of industry readiness indicates that only 14% of organizations possess the necessary infrastructure to deploy AI systems immediately. This technical unpreparedness poses a major challenge to executing generative AI.

Weak Computing Power for LLMs

The computational needs of large language models (LLMs) create big infrastructure hurdles. Training cutting-edge LLMs needs huge computing power, and a single top-tier GPU like the H100 is priced at over $30,000. Furthermore, training a single LLM can consume 1,287,000 kilowatt-hours of energy, resulting in approximately 552 tons of carbon emissions.

Memory needs pose a big challenge, too. A model containing 13 billion parameters at 16-bit precision requires over 24 GB of GPU memory just to load its weights (13 billion parameters at 2 bytes each), with total needs often going beyond 36 GB for one instance. IDC predicts that the global infrastructure market for AI will grow from $28.10 billion in 2022 to $57.00 billion by 2027.
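The memory figure follows from simple arithmetic: parameters times bytes per parameter. The sketch below works it through; the function names are made up for this example, and the 1.5x overhead factor is purely an illustrative assumption to show how total needs can exceed the weights-only number, not a measured value.

```python
def weights_memory_bytes(num_params: float, bits_per_param: int) -> float:
    """Bytes needed to hold the model weights alone."""
    return num_params * bits_per_param / 8


def to_gib(num_bytes: float) -> float:
    """Convert bytes to gibibytes (2**30 bytes)."""
    return num_bytes / 2**30


# 13 billion parameters at 16-bit precision, as in the text.
weights = weights_memory_bytes(13e9, 16)

# ASSUMPTION: a 1.5x multiplier for activations, caches, and runtime
# buffers. This factor is illustrative, not a measured value.
OVERHEAD_FACTOR = 1.5
total = weights * OVERHEAD_FACTOR

print(f"weights only: {to_gib(weights):.1f} GiB")
print(f"with assumed overhead: {to_gib(total):.1f} GiB")
```

The weights alone come to 26 billion bytes, or about 24.2 GiB, which is why a model of this size does not fit on a single 24 GB GPU without quantization or sharding.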

Lack of Modular and Scalable Architecture

Good AI strategy development needs frameworks you can build on. These should let you add more machines or upgrade your current hardware. Above all, these systems must work well no matter how much the workload changes.

A buildable AI setup should have three main parts:

- A data handling layer to take in, clean up, and store information

- A model creation layer to make and test AI models

- A rollout layer to put things into action
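The three layers above can be sketched as separate, composable pieces. This is a toy illustration under loud assumptions: every name is hypothetical, and the "model" is a simple lookup standing in for real model training, so that the separation between layers is the only thing on show.

```python
from typing import Callable

# Each layer is a plain function, so any one can be swapped or scaled
# without touching the others. All names here are hypothetical.


def data_layer(raw_records: list[str]) -> list[str]:
    """Ingest and clean: strip whitespace, drop empties, lowercase."""
    return [r.strip().lower() for r in raw_records if r.strip()]


def model_layer(clean_records: list[str]) -> Callable[[str], bool]:
    """'Train' a toy model: remember which records were seen."""
    known = set(clean_records)
    return lambda query: query.strip().lower() in known


def rollout_layer(model: Callable[[str], bool]) -> Callable[[str], str]:
    """Wrap the model behind a stable interface for callers."""
    def handle(request: str) -> str:
        return "known" if model(request) else "unknown"
    return handle


service = rollout_layer(model_layer(data_layer(["  Invoice ", "", "Refund"])))
print(service("invoice"))
print(service("warranty"))
```

Because callers only ever see the function returned by `rollout_layer`, the data handling or the model behind it can be replaced without changing what they call.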

Without this building-block approach, companies run into big problems when they try to grow their AI use. The biggest issue is that they can’t handle more data or different kinds of data without messing up what they’re already doing.

Too Much Reliance on Old Systems

Old systems pose big challenges to putting AI plans into action. In fact, 67% of businesses say outdated tech holds back their AI use, making tech debt a real problem. A study from 2024 found that this tech debt has a $1.52 trillion impact on the US economy.

These old systems often have bulky designs and isolated data, so they can’t handle the huge amounts of info and quick processing that modern AI needs. Their stiff setup and lack of standard connection points make it difficult to add new AI tools. This means bringing in generative AI becomes tricky, slow, and expensive.

Companies that don’t update their tech infrastructure face serious problems: they miss chances to grow, take longer to launch products, and fall behind in AI capabilities. Updating old systems has become essential to prepare for AI—unresolved legacy issues will slow down or stop AI strategy development.

Poor Data Management and Ethical Oversight

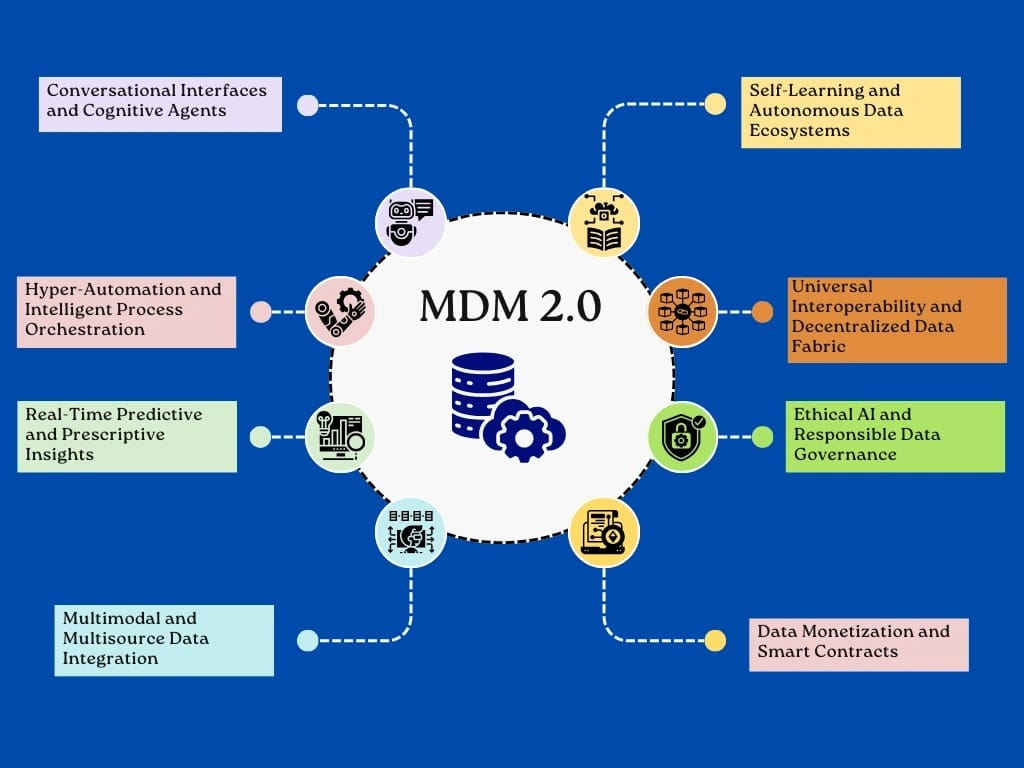

Image Source: The AI Journal

Businesses lose about $15 million each year because of bad data management, but most AI strategy plans ignore this key issue. The difference between regular data management and what AI needs creates big risks that can ruin even well-designed AI projects.

No AI-Specific Data Governance Framework

Old-school data governance built for regular reports and fixed databases doesn’t cut it for AI’s special needs. Generative AI needs its own rules to handle its ever-changing nature. These rules include automated quality checks, real-time fact-checking, and continuous compliance reviews. Without AI-specific rules, these systems are vulnerable to errors, unfairness, and conflicting messages. This special setup needs to go beyond just keeping data safe. It should cover the entire life of data in AI models, from its source to its cleanup to its ethical issues.

Unclear Ownership of Data Quality and Access

Creating a strong AI strategy requires clear rules about who owns what data. Currently, 35% of business leaders set up data management plans to boost data quality, but it’s often unclear who’s responsible for what. Many companies struggle with accountability as AI systems raise tricky questions about where data comes from, if it’s trustworthy, and how to protect privacy. This dilemma gets even trickier with AI that can create new content, since its flexible interfaces might let in unexpected sensitive info. This flexibility can lead to security risks where private data ends up in neural networks without anyone noticing.

No Clear Ethics Rules for Using AI That Creates Content

Companies face growing risks to their reputation, regulations, and legal standing when they don’t oversee the ethics of using generative AI. Some big cases show this: LA is suing IBM, claiming it took data through its weather app. Goldman Sachs is under investigation for an AI system that might have mistreated women. Furthermore, AI can create unfair content due to its creators’ biases, biased training data, or how the AI itself understands information. Since AI continues to change, ethical rules need to balance new ideas with keeping people’s information safe. Companies should set up AI ethics boards to watch over big projects. They should also make clear plans to deal with possible bias by checking their systems often and being open about how they handle data.

Not Planning for Talent and AI Knowledge

“21st-century illiterates will be those who can’t learn, unlearn, and relearn.” — Alvin Toffler, Futurist and Author

A surprising 70% of AI-literate workers see AI technology as promising, while only 29% of those with low AI literacy share this view. However, the human aspect of generative AI strategy remains underexplored, with fewer than half of companies having proper talent frameworks in place.

AI Engineers and Data Architects Lack Clear Job Descriptions

To implement generative AI, companies need four key talent types:

- Business process experts who grasp real-world results

- Machine learning specialists ensuring suitable methods

- Data specialists who understand quality and accessibility

- Architecture experts who design AI systems

AI architects act as the link between data scientists, engineers, developers, operations teams, and business leaders. Most organizations tackle talent acquisition instead of internal development. Companies developing AI strategies should make internal mobility a priority, as employees stay 41% longer in organizations that hire from within.

Not Enough Training to Engineer Prompts and Use LLMs

Prompt engineering is now as crucial as knowing Microsoft Office in today’s workplaces. So, employees who want to boost their AI skills are asking for structured training more than anything else. But most companies provide basic resources like articles and videos even though hands-on practice works much better.

Companies need to set up clear AI rules that show what’s okay to use while still letting people try things out without much risk. If they fail to do so, they may find themselves among the 30% of generative AI projects abandoned after the testing phase.

Companies Aren’t Ready to Create AI Strategies

AI literacy doesn’t come from top-down orders or required online classes—it grows when people get curious and try things out. Often, workers know more about AI than their bosses from hands-on use. This knowledge gap explains why 92% of organizations need to change to AI-first ways of working by 2024 to keep up with rivals.

Good AI plans need both a ready culture and tech skills. Companies should create places where staff see AI as a helpful tool, not a job taker. In the end, to use generative AI well, you need talent plans that both train current teams and hire experts for special jobs.

Conclusion

Building an Effective Generative AI Strategy Framework

In this article, we looked at why most strategies for generative AI don’t work even when companies spend a lot of money. The main problem is that businesses treat AI like it’s just about technology, not about changing how the whole company works.

To make a generative AI strategy that works, you need to align five key areas. The most important thing is to set clear goals for the business and ways to measure success before picking any tech solutions. If you don’t conduct thorough research beforehand, AI projects may become costly and ineffective experiments.

Furthermore, it’s crucial to check how ready your company is. Companies must be honest about their current capabilities, focus on the most impactful uses, and set up adaptable step-by-step goals as technology improves.

Technical infrastructure is a key part that people often miss. Old systems, weak computers, and rigid setups create big problems even for successful AI plans. So, companies need to invest in flexible, modular systems built for AI tasks.

Data control and ethics checks also need special focus. Regular control methods don’t work for AI systems that need quick checks, clear ownership, and full ethics rules. Companies that ignore these aspects face significant risks to their reputation, compliance with regulations, and legal standing.

How you handle talent is the human base for successful AI projects. Companies need to set roles for AI experts while also teaching AI skills to all workers. This readiness in company culture is just as key as tech skills.

We think companies that tackle these five areas as a whole will do well where others have come up short. Generative AI has enormous potential to bring new ideas and give companies an edge over rivals. But these benefits only materialize when business goals, tech skills, data rules, and human know-how come together in one plan.

Businesses that just run after the latest tech will keep joining the 71% that don’t hit their ROI targets. On the flip side, those who build full systems linking plans, skills, tech setup, rules, and people are far better placed to turn generative AI from a hot topic into real business worth.

FAQs

Q1. What are the reasons many generative AI plans fail to achieve their desired results?

Many generative AI plans don’t work because they don’t match business aims, lack proper tech setup, have poor data rules, and neglect skills development. Companies often pay too much attention to the tech itself instead of looking at all these important parts together.

Q2. What are the main hurdles in using generative AI?

Big challenges include not lining up with business goals, not checking how ready the company is, not having the right tech to handle AI work, weak rules for data use and ethics, and forgetting to plan for talent and teach everyone about AI.

Q3. How can businesses boost their odds of doing well with generative AI?

Businesses can boost their odds of winning by tying AI projects to specific company goals, doing full readiness checks, putting money into systems that can grow, setting up strong rules for data use, and making a complete plan for talent that covers both expert jobs and company-wide AI knowledge.

Q4. How does data quality affect generative AI success?

Data quality is key to generative AI success. Bad data leads to untrustworthy results and can keep biases going. Companies need to set up AI-specific rules for handling data, make clear who owns what data, and make sure they have high-quality representative data sets to train and run AI models.

Q5. How important is organizational culture when adopting generative AI?

Organizational culture plays a crucial role in adopting generative AI. Companies need to create an environment where employees see AI as a powerful tool instead of a threat. This involves giving hands-on training, pushing for experimentation, and building a culture of ongoing learning to keep up with fast-changing AI technologies.